“The rise of modern computing in massive memory intensive data analysis”

Early Computers in Data Analytics

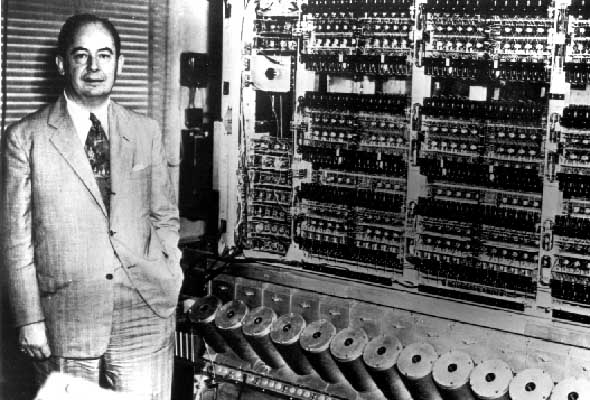

During World War II, mathematicians and physicists used early computers for scientific calculations in their work. Although the machines were primitive, they made it possible to analyze data faster. After the war, that experience led to the emergence of the field of scientific computing, with numerical linear algebra at its core. The field developed quickly across the US, the USSR, the UK and other European countries. The first large-scale electronic computer, ENIAC (Electronic Numerical Integrator and Computer), was commissioned by the US military and developed by John Mauchly and John Presper Eckert; it was completed in 1945 and publicly unveiled in 1946. In picture: Dr. John von Neumann with ENIAC.

The two ENIAC inventors went on to form the Eckert-Mauchly Computer Corporation, and in 1949 their company launched the BINAC (BINary Automatic Computer), which used magnetic tape to store data. Remington Rand bought the Eckert-Mauchly Computer Corporation in 1950 and renamed it the Univac Division of Remington Rand. Its research resulted in the UNIVAC (UNIVersal Automatic Computer), an important forerunner of today’s computers. Remington Rand merged with the Sperry Corporation in 1955 to form Sperry Rand, which in turn merged with Burroughs Corporation in 1986 to become Unisys.

Spread of Computer Use in Data Analytics

Computers for data analytics first spread mainly through scientific and engineering use. Soon after the war, in 1946, Argonne National Laboratory (ANL) was established. ANL is a national science and engineering research laboratory operated by UChicago Argonne, LLC, for the United States Department of Energy. Its first digital computer, the AVIDAC (Argonne Version of the Institute’s Digital Automatic Computer), was built by ANL’s Physics Division and completed in 1953. It was based on the IAS (Institute for Advanced Study) computer architecture developed by John von Neumann.

Lawrence Livermore National Laboratory (LLNL), a federal research facility in Livermore, California, founded by the University of California in 1952, was a pioneer in adopting computers for scientific computing that involved heavy data analysis. It is a Federally Funded Research and Development Center sponsored by the United States Department of Energy. LLNL’s principal responsibility is ensuring the safety, security and reliability of US nuclear weapons through the application of advanced science, engineering and technology. LLNL began using a Remington Rand UNIVAC I (UNIVersal Automatic Computer) in 1953. In subsequent years, as its data collection grew manyfold, LLNL moved to more powerful IBM computers such as the 70XX series.

The European Organization for Nuclear Research (CERN) was established in 1954, two years after LLNL. CERN’s first computer, a huge vacuum-tube Ferranti Mercury, was installed in 1958, though it was roughly a million times slower than today’s large computers. It was replaced in 1960 by the more powerful IBM 709, a machine from the IBM 700 series that LLNL had begun adopting in the mid-1950s.

Other nuclear research facilities were established in the US in the 1960s, notably the Stanford Linear Accelerator Center (SLAC) in 1962 and Fermilab in 1967. SLAC installed an IBM System/360 Model 91 in June 1965 for high-energy particle physics data analysis.

Computer Languages & Development of Matrix Algebra

To advance mathematical computing for analysing massive datasets, computer languages needed to be developed. IBM developed Fortran (derived from Formula Translating System), a general-purpose, imperative programming language that targets numeric and scientific computing. Originally developed in the 1950s for scientific and engineering applications, Fortran dominated this area of programming early on and has been in continuous use for over half a century in computationally intensive areas such as numerical weather prediction, computational fluid dynamics, particle and computational physics, and computational chemistry. It remains a popular language in high-performance computing (HPC), the discipline that underpins today’s massive data analysis.

BLAS (Basic Linear Algebra Subprograms), a specification that prescribes a set of low-level routines for common linear algebra operations such as vector addition, scalar multiplication, dot products, linear combinations and matrix multiplication, emerged in the late 1970s. Most optimized linear algebra libraries, such as the AMD Core Math Library (ACML), ATLAS and the Intel Math Kernel Library (MKL), to name a few, conform to BLAS, which lets users develop programs that are agnostic of the particular BLAS library underneath.
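To make the operations listed above concrete, here is a minimal sketch in plain Python. The function names borrow from the familiar BLAS routine families (axpy, dot, gemv, gemm), but the signatures are simplified for illustration and are an assumption of this sketch, not the actual BLAS interface. The real routines also take scaling factors, strides and output arguments, and are heavily optimized for the hardware.

```python
def axpy(alpha, x, y):
    """Vector-vector operation: the linear combination alpha*x + y."""
    return [alpha * xi + yi for xi, yi in zip(x, y)]


def dot(x, y):
    """Vector-vector operation: the dot product of x and y."""
    return sum(xi * yi for xi, yi in zip(x, y))


def gemv(A, x):
    """Matrix-vector operation: A times x (A is a list of rows)."""
    return [dot(row, x) for row in A]


def gemm(A, B):
    """Matrix-matrix operation: A times B (both are lists of rows)."""
    columns_of_B = list(zip(*B))  # transpose B so its columns can be iterated
    return [[dot(row, col) for col in columns_of_B] for row in A]


print(axpy(2.0, [1.0, 2.0], [3.0, 4.0]))
print(dot([1, 2, 3], [4, 5, 6]))
print(gemv([[1, 2], [3, 4]], [1, 1]))
print(gemm([[1, 2], [3, 4]], [[0, 1], [1, 0]]))
```

In practice, nobody writes these loops by hand for large data. Higher-level tools hand the work to a BLAS-compatible library, which is why the same program can run unchanged on top of different BLAS implementations.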

The software library LINPACK (LINear equations software PACKage) was developed in Fortran by the late 1970s to perform numerical linear algebra for heavy data analysis tasks on digital computers. Its authors, Jack Dongarra, Cleve Moler, et al., wrote it for use on supercomputers. LINPACK has since been largely superseded by LAPACK (Linear Algebra Package), which runs more efficiently on modern computer architectures. Cleve Moler went on to co-found MathWorks, which develops MATLAB, commercial software for high-end data analysis.

Both open source and commercial software built on top of BLAS-compatible libraries is available today, including LAPACK, LINPACK, GNU Octave, Mathematica, MATLAB, NumPy and R, all popular in data analysis. Google’s PageRank algorithm can be solved with the power iteration method, a matrix technique. When someone conducts a Google search, the results returned draw on math routines of the kind described in this section.
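As a rough illustration of power iteration applied to PageRank, the sketch below repeatedly redistributes rank along links until the values stop changing. It is a teaching example in plain Python, not Google’s implementation. The function name `pagerank`, the tiny three-page graph and the damping factor of 0.85 are assumptions made for this sketch, and every linked page is assumed to appear as a key in the `links` dictionary.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=200):
    """Approximate PageRank scores by power iteration.

    links maps each page to the list of pages it links to.
    Assumes every link target is also a key of links.
    """
    pages = sorted(links)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution

    for _ in range(max_iter):
        # Rank held by pages with no outgoing links is spread evenly.
        dangling = sum(rank[p] for p in pages if not links[p])
        base = (1.0 - damping) / n + damping * dangling / n
        new_rank = {p: base for p in pages}

        # Each page passes an equal share of its rank to the pages it links to.
        for p in pages:
            for q in links[p]:
                new_rank[q] += damping * rank[p] / len(links[p])

        change = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if change < tol:  # stop once the scores have stabilised
            break
    return rank


# Hypothetical three-page web: a links to b and c, b links to c, c links to a.
ranks = pagerank({"a": ["b", "c"], "b": ["c"], "c": ["a"]})
for page in sorted(ranks, key=ranks.get, reverse=True):
    print(page, round(ranks[page], 4))
```

Each pass of the loop is equivalent to one matrix-vector multiplication, which is why a real system would express it with the BLAS-style routines discussed earlier rather than with Python dictionaries.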

Internet & The Rise of Modern Computing in Massive Memory Intensive Data Analysis

As stated in the first section of this article, massive data analysis tasks were solely the domain of physicists, mathematicians and engineers until about the mid-1990s, by which time the World Wide Web had already been invented at CERN by Tim Berners-Lee in 1989. Major internet search engine companies emerged from the mid-1990s onward, such as Yahoo, Teoma, Ask.com, Baidu and Google. The age of big data was born because most businesses from then on started operating on the internet and people around the world began engaging socially online. As a result, the data being collected accumulated much faster than ever before.

Analyzing massive data to extract meaningful insights is no longer the monopoly of physicists and engineers; it is now pervasive in business as well. It has become a must-have capability in today’s business environment, and the technology to store, retrieve and analyze big data is now readily available.

Data Science is now an interdisciplinary domain that draws on knowledge from Computing, Statistics, Physics, Mathematics, Economics/Finance and Engineering to tackle Big Data as we know it.

We hope you enjoyed reading this second part of the Evolution of Data Science series. Speak with us today to find out how our interdisciplinary Data Science team analyzes big data in the TV domain.

– Sione K. Palu, Data Scientist